DPO vs. RLHF

DPO

Andrew Ng's quote 👇

- It has been half a year since DPO was proposed (arXiv v1 submitted on 29 May 2023), and most of the top methods on the LLM leaderboard are now based on DPO.


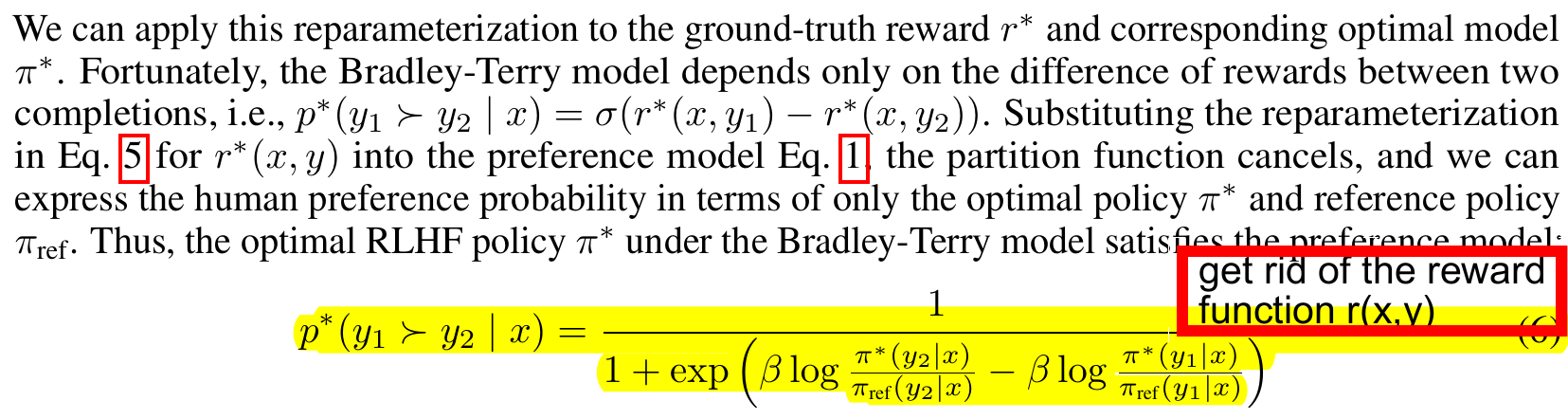

In this paper we introduce a new parameterization of the reward model in RLHF that enables extraction of the corresponding optimal policy in closed form, allowing us to solve the standard RLHF problem with only a simple classification loss.
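The "simple classification loss" can be sketched as follows. Under the reparameterization, the implicit reward of a response is β · log(π_θ(y|x) / π_ref(y|x)), plus a prompt-only term that cancels when two responses to the same prompt are compared. The loss is then the negative log-sigmoid of the reward margin between the preferred response y_w and the dispreferred response y_l. This is a minimal sketch in plain Python. It assumes per-sequence log-probabilities have already been computed under the trainable policy and a frozen reference model. The function names and the default β = 0.1 are illustrative choices, not taken from the paper's code.

```python
import math


def log_sigmoid(z: float) -> float:
    # Numerically stable log(sigmoid(z)); avoids overflow for large |z|
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    # Implicit reward of each response: beta * log(pi_theta / pi_ref)
    chosen_reward = beta * (policy_chosen_logp - ref_chosen_logp)
    rejected_reward = beta * (policy_rejected_logp - ref_rejected_logp)
    # Bradley-Terry style binary classification loss on the reward margin
    return -log_sigmoid(chosen_reward - rejected_reward)


def batch_dpo_loss(batch, beta: float = 0.1) -> float:
    # batch: list of (policy_chosen, policy_rejected, ref_chosen, ref_rejected) log-probs
    losses = [dpo_loss(*example, beta=beta) for example in batch]
    return sum(losses) / len(losses)


if __name__ == "__main__":
    # Policy shifted probability toward the chosen answer relative to the reference
    print(round(dpo_loss(-1.0, -3.0, -2.0, -2.0), 4))
```

When the policy equals the reference model, the margin is zero and the loss is log 2. Raising the chosen response's log-ratio (or lowering the rejected one's) pushes the loss below that, so no separate reward model or RL loop is needed in this sketch.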
