Mamba 2

Transformers are SSMs: Generalized Models and Efficient Algorithms Through Structured State Space Duality

Okay, it is not really about Mamba 2 itself; it is mostly a theory paper.

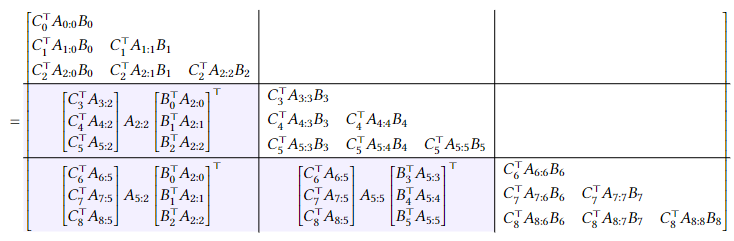

This paper mainly focuses on proving that the attention mechanism is a tensor contraction, and by doing so, it brings the Transformer architecture and SSMs (state space models) together. (I think...)
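To check the "Transformers are SSMs" idea for myself, here is a minimal NumPy sketch. It assumes the simplest case I could think of: one head, no softmax, and an SSM whose state transition is just a scalar decay `a_t` at each step. All names (`ssm_recurrent`, `ssm_as_masked_attention`, `a`, `B`, `C`, `X`) are my own. The same output comes out of a linear-time recurrence and out of a quadratic, attention-like form where `C` acts like the queries, `B` like the keys, `X` like the values, and a lower-triangular decay mask `L` takes the place of the softmax.

```python
import numpy as np

# Assumption: scalar decay a_t per step, single head, no softmax.

def ssm_recurrent(a, B, C, X):
    """Linear-time scan: h_t = a_t * h_{t-1} + B_t x_t^T,  y_t = C_t^T h_t."""
    h = np.zeros((B.shape[1], X.shape[1]))  # state: (state size n, head dim p)
    Y = np.zeros_like(X)
    for t in range(len(a)):
        h = a[t] * h + np.outer(B[t], X[t])
        Y[t] = C[t] @ h
    return Y

def ssm_as_masked_attention(a, B, C, X):
    """Quadratic form: Y = (L * (C B^T)) X, with L[i, j] = a_{j+1} * ... * a_i for j <= i."""
    T = len(a)
    L = np.zeros((T, T))
    for i in range(T):
        for j in range(i + 1):
            L[i, j] = np.prod(a[j + 1 : i + 1])  # empty product is 1 on the diagonal
    return (L * (C @ B.T)) @ X

rng = np.random.default_rng(0)
T, n, p = 6, 3, 2                 # sequence length, state size, head dim (illustrative)
a = rng.uniform(0.5, 1.0, T)      # per-step scalar decay
B = rng.standard_normal((T, n))   # acts like keys
C = rng.standard_normal((T, n))   # acts like queries
X = rng.standard_normal((T, p))   # acts like values

print(np.allclose(ssm_recurrent(a, B, C, X), ssm_as_masked_attention(a, B, C, X)))  # True
```

The recurrence touches each time step once, while the masked-attention form materializes a T x T matrix; in this toy case they compute exactly the same thing.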

I have always thought of attention as taking two tensors (e.g. a query and a key), producing a scalar from them, and using that scalar to assess the relationship between the two. Tensor contraction is a more general description of the same idea.
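A small NumPy sketch of that view, using ordinary softmax attention (the shapes and the `1/sqrt(d)` scaling are the standard Transformer choices, not something specific to this paper; variable names are illustrative). The query-key score is a contraction over the shared feature index `d`, and the weighted sum over values is another contraction, this time over the key index `j`.

```python
import numpy as np

rng = np.random.default_rng(0)
T, d = 5, 4                      # sequence length and head dimension (illustrative)
Q = rng.standard_normal((T, d))  # queries
K = rng.standard_normal((T, d))  # keys
V = rng.standard_normal((T, d))  # values

# One query and one key: contracting the shared index d gives a single scalar.
score_0_1 = np.einsum("d,d->", Q[0], K[1])

# Every query against every key: the same contraction, leaving a (T, T) score matrix.
S = np.einsum("id,jd->ij", Q, K) / np.sqrt(d)

# Row-wise softmax over keys (shifted by the row max for numerical stability).
W = np.exp(S - S.max(axis=1, keepdims=True))
W /= W.sum(axis=1, keepdims=True)

# Mixing the values is one more contraction, over the key index j.
Y = np.einsum("ij,jd->id", W, V)
```

Written with `einsum`, the "two tensors in, scalar out" picture is just the special case where every index except the contracted one is fixed.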

There are some other contributions, such as:

Decomposing the matrix into blocks and exploiting the low-rank structure of the off-diagonal blocks, which makes the computation more efficient.
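The block idea can be sketched in NumPy too, under the same simplifying assumptions as before (one head, no softmax, a scalar decay `a_t` per step; every name here is my own). This is a simplified sequential version, not the paper's actual kernel: each diagonal block of the T x T matrix is computed directly like a small masked attention, and every off-diagonal block is handled through an `n`-dimensional state passed from chunk to chunk, which works because those blocks factor through the state and so have rank at most `n`.

```python
import numpy as np

# Assumption: scalar decay a_t per step, single head, no softmax.

def decay_mask(a):
    """L[i, j] = a[j+1] * ... * a[i] for j <= i, else 0."""
    T = len(a)
    L = np.zeros((T, T))
    for i in range(T):
        for j in range(i + 1):
            L[i, j] = np.prod(a[j + 1 : i + 1])
    return L

def ssd_quadratic(a, B, C, X):
    """Reference: materialize the full T x T matrix (O(T^2))."""
    return (decay_mask(a) * (C @ B.T)) @ X

def ssd_chunked(a, B, C, X, chunk=4):
    """Diagonal blocks computed directly; off-diagonal (low-rank) blocks via a carried state."""
    T, n = B.shape
    p = X.shape[1]
    h = np.zeros((n, p))  # state at the end of the previous chunk
    Y = np.zeros((T, p))
    for s in range(0, T, chunk):
        e = min(s + chunk, T)
        a_c, B_c, C_c, X_c = a[s:e], B[s:e], C[s:e], X[s:e]
        # Diagonal block: a small masked-attention computation inside the chunk.
        Y[s:e] = (decay_mask(a_c) * (C_c @ B_c.T)) @ X_c
        # Off-diagonal blocks: incoming state decayed to each position t, read out by C_t.
        decay_in = np.cumprod(a_c)  # decay_in[k] = a[s] * ... * a[s + k]
        Y[s:e] += decay_in[:, None] * (C_c @ h)
        # Carry the state forward to the end of this chunk.
        decay_out = np.array([np.prod(a_c[j + 1:]) for j in range(e - s)])
        h = decay_in[-1] * h + (decay_out[:, None] * B_c).T @ X_c
    return Y

rng = np.random.default_rng(0)
T, n, p = 10, 3, 2                # illustrative sizes; 10 is not a multiple of 4 on purpose
a = rng.uniform(0.5, 1.0, T)
B, C = rng.standard_normal((T, n)), rng.standard_normal((T, n))
X = rng.standard_normal((T, p))

print(np.allclose(ssd_chunked(a, B, C, X, chunk=4), ssd_quadratic(a, B, C, X)))  # True
```

With `chunk=1` this reduces to the plain recurrence, and with `chunk=T` it is just the full quadratic form; chunk sizes in between trade one against the other.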

And... I have not actually read the full paper yet...