Prompt engineering in Agent and RAG

Here are six strategies for getting better results from large language models:

- Write Clear Instructions: Provide detailed queries and specify the format you want, so that responses are more relevant and accurate.

- Provide Reference Text: Supplying reference material helps the model answer with fewer fabrications, especially on esoteric topics. This works much like in-context learning.

- Split Complex Tasks: Break complex tasks down into simpler subtasks to reduce error rates, and manage the subtasks as a workflow.

- Give the Model Time to “Think”: Let the model reason through a problem step by step before answering, which improves reliability.

- Use External Tools: Extend the model’s capabilities by feeding it the outputs of other tools, for tasks like calculations or data retrieval.

- Test Changes Systematically: Define a comprehensive test suite and measure performance against it whenever the prompt changes.
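The last strategy can be sketched as a tiny evaluation harness. This is only an illustration: `fake_llm`, the two test cases, and both prompt templates are made up here, and a real suite would call an actual model and use many more cases.

```python
from typing import Callable, List, Tuple

# Hypothetical test suite: (question, substring the answer is expected to contain).
TEST_CASES: List[Tuple[str, str]] = [
    ("What is 2 + 2?", "4"),
    ("What is the capital of France?", "Paris"),
]


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call; it rambles unless asked for one word."""
    if "2 + 2" in prompt:
        return "4"
    if "France" in prompt:
        return "Paris" if "one word" in prompt else "It is a lovely city."
    return "I don't know."


def pass_rate(template: str, llm: Callable[[str], str]) -> float:
    """Fraction of test cases whose expected substring appears in the output."""
    passed = 0
    for question, expected in TEST_CASES:
        output = llm(template.format(question=question))
        if expected in output:
            passed += 1
    return passed / len(TEST_CASES)


# Compare the old prompt with a candidate that adds a clearer instruction.
baseline = pass_rate("Q: {question}\nA:", fake_llm)
candidate = pass_rate("Answer in one word.\nQ: {question}\nA:", fake_llm)
print(f"baseline={baseline:.2f} candidate={candidate:.2f}")
```

Scoring both templates against the same fixed cases makes a prompt change measurable instead of anecdotal.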

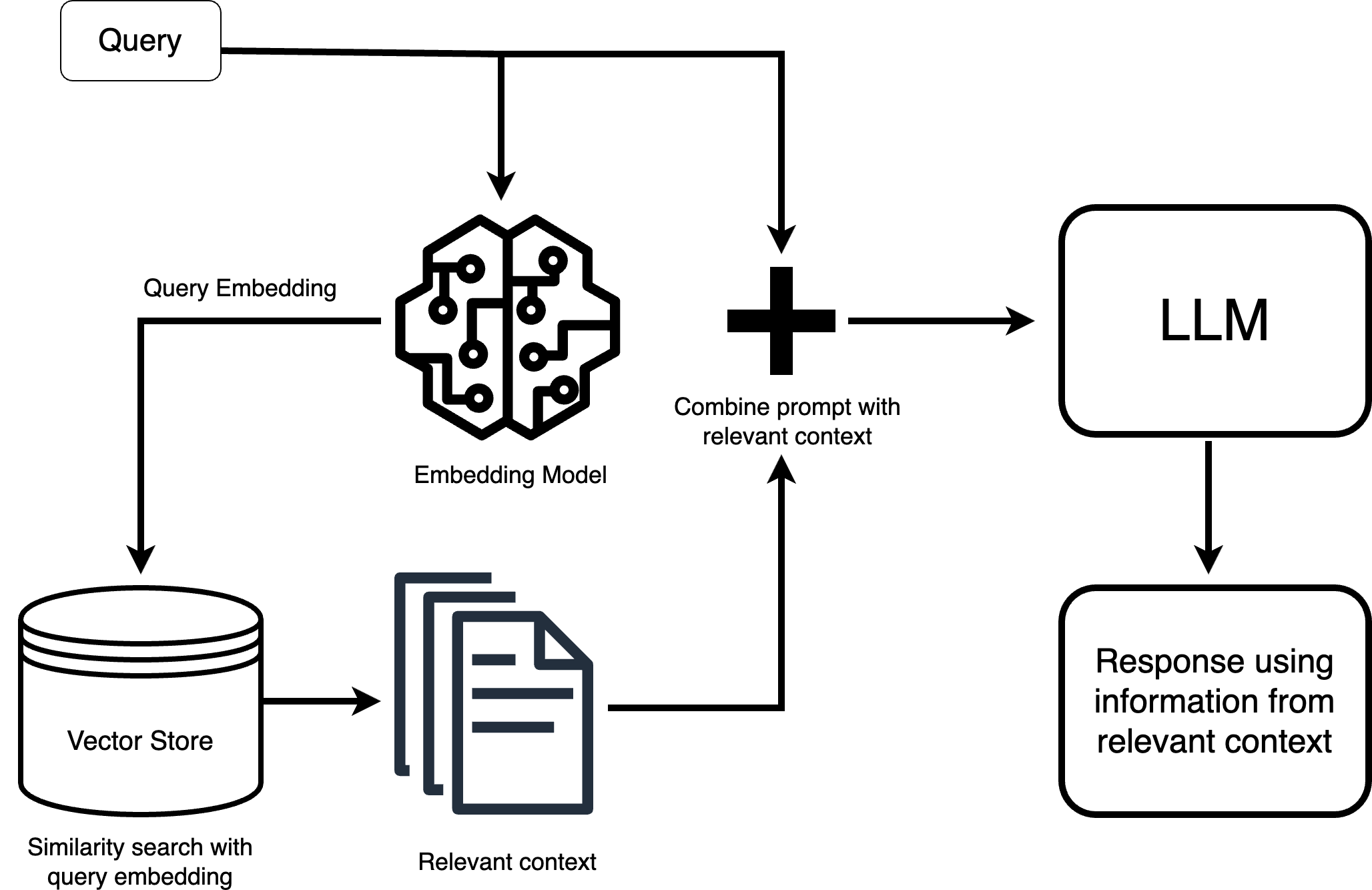

RAG

Prompt Example:

Prompt Key: response_synthesizer:text_qa_template

Text: Context information is below.

---------------------

{context_str}

---------------------

Given the context information and not prior knowledge, answer the query. Please cite the contexts with the reference numbers, in the format [citation: x].

Query: {query_str}

Answer:

Prompt Key: response_synthesizer:refine_template

Text: The original query is as follows: {query_str}

We have provided an existing answer: {existing_answer}

We have the opportunity to refine the existing answer (only if needed) with some more context below.

------------

{context_msg}

------------

Given the new context, refine the original answer to better answer the query. If the context isn't useful, return the original answer.

Refined Answer:
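A minimal sketch of how the two templates could work together, assuming an "answer from the first chunk, then refine with each later chunk" flow. The function `answer_with_refine`, the stub `recording_llm`, and the sample chunks are hypothetical; the response synthesizer in the actual library may batch or order chunks differently.

```python
from typing import Callable, List

TEXT_QA_TEMPLATE = (
    "Context information is below.\n"
    "---------------------\n"
    "{context_str}\n"
    "---------------------\n"
    "Given the context information and not prior knowledge, answer the query. "
    "Please cite the contexts with the reference numbers, "
    "in the format [citation: x].\n"
    "Query: {query_str}\n"
    "Answer: "
)

REFINE_TEMPLATE = (
    "The original query is as follows: {query_str}\n"
    "We have provided an existing answer: {existing_answer}\n"
    "We have the opportunity to refine the existing answer "
    "(only if needed) with some more context below.\n"
    "------------\n"
    "{context_msg}\n"
    "------------\n"
    "Given the new context, refine the original answer to better answer the query. "
    "If the context isn't useful, return the original answer.\n"
    "Refined Answer: "
)


def answer_with_refine(query: str, chunks: List[str], llm: Callable[[str], str]) -> str:
    """Answer from the first chunk, then offer each later chunk as a refinement.

    Assumes at least one chunk was retrieved.
    """
    # Number the chunks so the model can cite them as [citation: x].
    numbered = [f"[{i}] {chunk}" for i, chunk in enumerate(chunks, start=1)]
    answer = llm(TEXT_QA_TEMPLATE.format(context_str=numbered[0], query_str=query))
    for context in numbered[1:]:
        answer = llm(
            REFINE_TEMPLATE.format(
                query_str=query, existing_answer=answer, context_msg=context
            )
        )
    return answer


prompts_seen: List[str] = []


def recording_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model call: records the prompt, returns a canned reply."""
    prompts_seen.append(prompt)
    return f"draft {len(prompts_seen)} [citation: {len(prompts_seen)}]"


final = answer_with_refine(
    "Who maintains the service?",
    ["Team A owns the billing service.", "Team A hands over to Team B in May."],
    recording_llm,
)
print(final)
```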

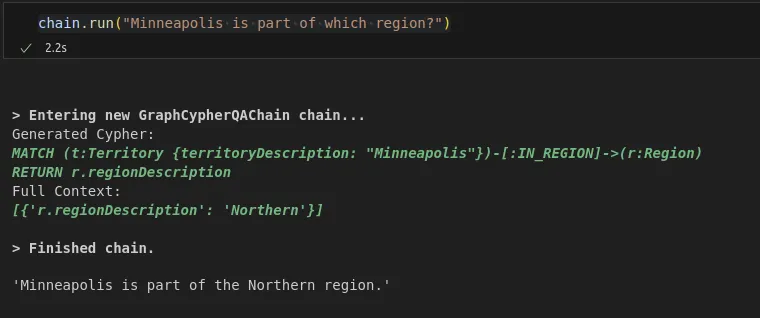

CYPHER_GENERATION_PROMPT

CYPHER_GENERATION_TEMPLATE = """Task:Generate Cypher statement to query a graph database.

Instructions:

Use only the provided relationship types and properties in the schema.

Do not use any other relationship types or properties that are not provided.

Schema:

{schema}

Note: Do not include any explanations or apologies in your responses.

Do not respond to any questions that might ask anything else than for you to construct a Cypher statement.

Do not include any text except the generated Cypher statement.

The question is:

{question}

Important: In the generated Cypher query, the RETURN statement must explicitly include the property values used in the query's filtering condition, alongside the main information requested from the original question.

"""

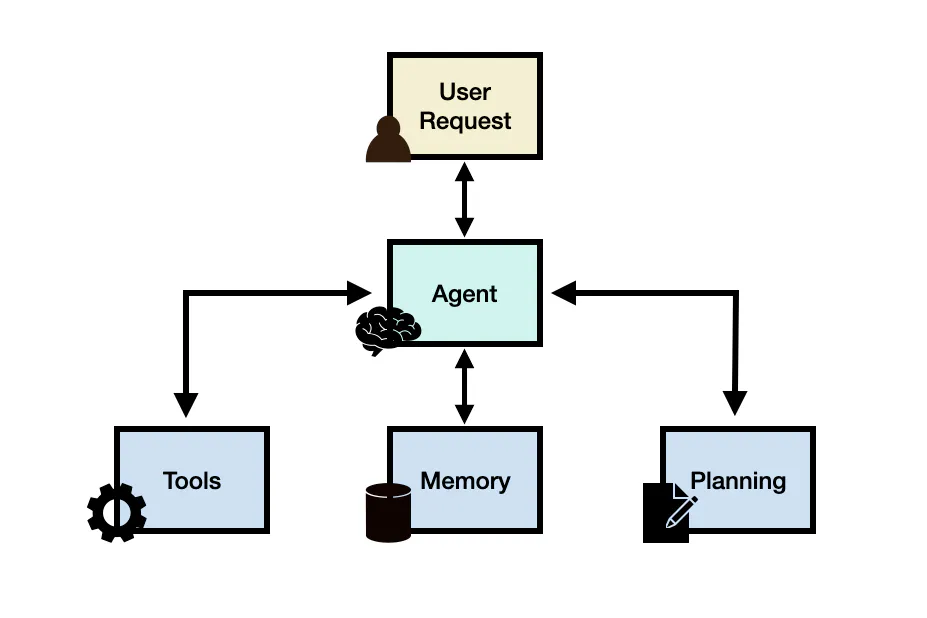
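A rough sketch of using a Cypher generation prompt like the one above: fill in the schema and question, then check that the generated statement only uses relationship types from the schema. The schema, the sample statements, and the helper names are assumptions for illustration, and the regex is a simple check that ignores forms such as `[:A|B]`.

```python
import re
from typing import Set

# Shortened stand-in for the CYPHER_GENERATION_TEMPLATE shown above.
CYPHER_PROMPT = (
    "Task:Generate Cypher statement to query a graph database.\n"
    "Instructions:\n"
    "Use only the provided relationship types and properties in the schema.\n"
    "Schema:\n{schema}\n"
    "The question is:\n{question}\n"
)

# Hypothetical schema, for illustration only.
SCHEMA = (
    "(:Person {name})-[:ACTED_IN]->(:Movie {title})\n"
    "(:Person {name})-[:DIRECTED]->(:Movie {title})"
)
ALLOWED_RELATIONSHIPS = {"ACTED_IN", "DIRECTED"}


def build_prompt(question: str) -> str:
    """Fill the template; braces inside SCHEMA are safe because it is passed as a value."""
    return CYPHER_PROMPT.format(schema=SCHEMA, question=question)


def unknown_relationships(cypher: str, allowed: Set[str]) -> Set[str]:
    """Return relationship types used in the statement that are not in the schema."""
    used = set(re.findall(r"\[\s*\w*\s*:\s*(\w+)", cypher))
    return used - allowed


# Pretend model outputs. The first also returns m.title, the property used in
# the filter, as the "Important" note in the template requests.
good = (
    "MATCH (p:Person)-[:ACTED_IN]->(m:Movie) "
    "WHERE m.title = 'Heat' RETURN m.title, p.name"
)
bad = "MATCH (p:Person)-[r:PRODUCED]->(m:Movie) RETURN p.name"

print(unknown_relationships(good, ALLOWED_RELATIONSHIPS))
print(unknown_relationships(bad, ALLOWED_RELATIONSHIPS))
```

A check like this can reject or retry a generation before the statement ever reaches the database.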

Agent

LLM Agents | Prompt Engineering Guide (promptingguide.ai)

Prompt Example: Custom LLM Agent | 🦜️🔗 Langchain

/** Create the prefix prompt */

const PREFIX = `Answer the following questions as best you can. You have access to the following tools: {tools}`;

/** Create the tool instructions prompt */

const TOOL_INSTRUCTIONS_TEMPLATE = `Use the following format in your response:

Question: the input question you must answer

Thought: you should always think about what to do

Action: the action to take, should be one of [{tool_names}]

Action Input: the input to the action

Observation: the result of the action

... (this Thought/Action/Action Input/Observation can repeat N times)

Thought: I now know the final answer

Final Answer: the final answer to the original input question`;

/** Create the suffix prompt */

const SUFFIX = `Begin!

Question: {input}

Thought: `;
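The format above only helps if the model's reply can be turned back into a tool call or a final answer. A minimal parsing sketch, assuming the model follows the format exactly; `ToolCall`, `FinalAnswer`, and `parse_agent_output` are names invented for this example and are not LangChain's own classes.

```python
import re
from typing import NamedTuple, Union


class ToolCall(NamedTuple):
    tool: str
    tool_input: str


class FinalAnswer(NamedTuple):
    text: str


# Matches "Action: <tool>" followed on a later line by "Action Input: <input>".
ACTION_PATTERN = re.compile(r"Action:\s*(.+?)\s*\n\s*Action Input:\s*(.+)")


def parse_agent_output(output: str) -> Union[ToolCall, FinalAnswer]:
    """Turn one model reply into either a tool call or the final answer."""
    if "Final Answer:" in output:
        return FinalAnswer(output.split("Final Answer:")[-1].strip())
    match = ACTION_PATTERN.search(output)
    if match is None:
        raise ValueError(f"Could not parse agent output: {output!r}")
    return ToolCall(match.group(1).strip(), match.group(2).strip())


step = parse_agent_output(
    "Thought: I should look this up\nAction: search\nAction Input: capital of France"
)
done = parse_agent_output("Thought: I now know the final answer\nFinal Answer: Paris")
print(step)
print(done)
```

Raising on unparseable output is a deliberate choice here: the caller can then decide whether to retry or to feed the error back to the model.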

Another example, from LangSmith:

Assistant is a large language model trained by OpenAI.

Assistant is designed to be able to assist with a wide range of tasks, from answering simple questions to providing in-depth explanations and discussions on a wide range of topics. As a language model, Assistant is able to generate human-like text based on the input it receives, allowing it to engage in natural-sounding conversations and provide responses that are coherent and relevant to the topic at hand.

Assistant is constantly learning and improving, and its capabilities are constantly evolving. It is able to process and understand large amounts of text, and can use this knowledge to provide accurate and informative responses to a wide range of questions. Additionally, Assistant is able to generate its own text based on the input it receives, allowing it to engage in discussions and provide explanations and descriptions on a wide range of topics.

Overall, Assistant is a powerful tool that can help with a wide range of tasks and provide valuable insights and information on a wide range of topics. Whether you need help with a specific question or just want to have a conversation about a particular topic, Assistant is here to assist.

TOOLS:

------

Assistant has access to the following tools:

{tools}

To use a tool, please use the following format:

\```

Thought: Do I need to use a tool? Yes

Action: the action to take, should be one of [{tool_names}]

Action Input: the input to the action

Observation: the result of the action

\```

When you have a response to say to the Human, or if you do not need to use a tool, you MUST use the format:

\```

Thought: Do I need to use a tool? No

Final Answer: [your response here]

\```

Begin!

Previous conversation history:

{chat_history}

New input: {input}

{agent_scratchpad}
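A small sketch of how the placeholders in a prompt like this one might be filled before each model call. The template is abbreviated, and the tool descriptions, history, and variable names are hypothetical; an agent framework normally does this rendering for you.

```python
# Abbreviated stand-in for the conversational agent prompt above.
TEMPLATE = (
    "TOOLS:\n"
    "------\n"
    "Assistant has access to the following tools:\n"
    "{tools}\n\n"
    "Action: the action to take, should be one of [{tool_names}]\n\n"
    "Previous conversation history:\n"
    "{chat_history}\n\n"
    "New input: {input}\n"
    "{agent_scratchpad}"
)

# Hypothetical tool descriptions and conversation so far.
tool_descriptions = {
    "search": "Look up facts on the web.",
    "calculator": "Evaluate arithmetic expressions.",
}
history = [("Human", "Hi"), ("AI", "Hello! How can I help?")]

prompt = TEMPLATE.format(
    tools="\n".join(f"{name}: {desc}" for name, desc in tool_descriptions.items()),
    tool_names=", ".join(tool_descriptions),
    chat_history="\n".join(f"{role}: {text}" for role, text in history),
    input="What is 6 times 7?",
    agent_scratchpad="",  # empty on the first step; later holds prior Thought/Action/Observation text
)
print(prompt)
```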

Planning

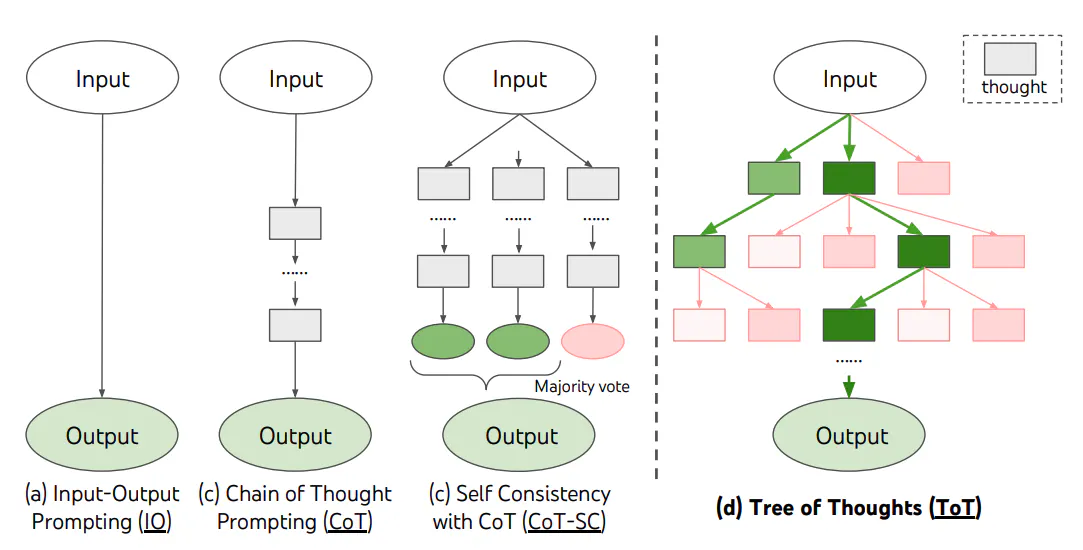

- Agent Planning: Involves breaking down complex tasks into subtasks that an LLM agent can solve individually.

- Without Feedback: Utilizes techniques like Chain of Thought and Tree of Thoughts to decompose tasks without external feedback.

- With Feedback: Employs methods like ReAct and Reflexion to refine the plan iteratively, based on past actions and observations.

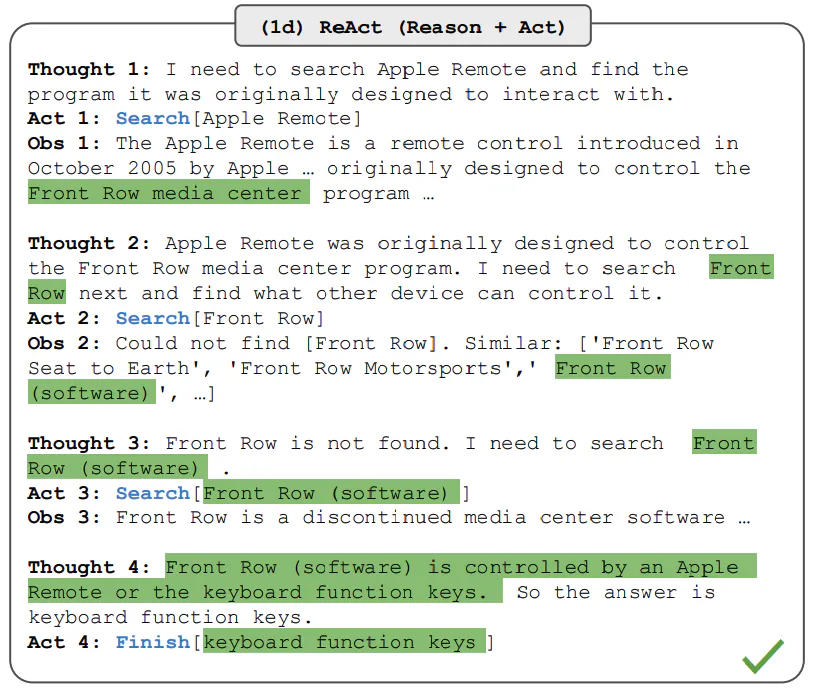

- ReAct is a general paradigm that combines reasoning and acting with LLMs. ReAct prompts LLMs to generate verbal reasoning traces and actions for a task.

- Core Components: Planning is a core component of an LLM agent framework; it helps the agent plan future actions and carry out tasks.
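Planning with feedback, in the ReAct style described above, can be sketched as a loop that feeds each tool observation back into the next prompt. Everything here is a toy under stated assumptions: `scripted_llm` replays canned replies instead of calling a model, and `run_react` and the `multiply` tool are invented for illustration.

```python
from typing import Callable, Dict, List


def multiply(tool_input: str) -> str:
    """Toy tool: multiplies two comma-separated integers."""
    left, right = (int(part) for part in tool_input.split(","))
    return str(left * right)


def run_react(
    question: str,
    llm: Callable[[str], str],
    tools: Dict[str, Callable[[str], str]],
    max_steps: int = 5,
) -> str:
    """Alternate model reasoning and tool calls until a final answer appears."""
    scratchpad = ""
    for _ in range(max_steps):
        output = llm(f"Question: {question}\n{scratchpad}Thought:")
        if "Final Answer:" in output:
            return output.split("Final Answer:")[-1].strip()
        action = output.split("Action:")[1].split("\n")[0].strip()
        action_input = output.split("Action Input:")[1].split("\n")[0].strip()
        observation = tools[action](action_input)
        # The observation is the feedback the next step can reason about.
        scratchpad += f"Thought:{output}\nObservation: {observation}\n"
    raise RuntimeError("Agent did not reach a final answer")


prompts: List[str] = []
replies = [
    " I should multiply the two numbers.\nAction: multiply\nAction Input: 6, 7",
    " I now know the final answer\nFinal Answer: 42",
]


def scripted_llm(prompt: str) -> str:
    """Stand-in for a model: records the prompt and replays the next canned reply."""
    prompts.append(prompt)
    return replies[len(prompts) - 1]


answer = run_react("What is 6 times 7?", scripted_llm, {"multiply": multiply})
print(answer)
```

The `max_steps` cap is there so that a model that never emits a final answer cannot loop forever.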

Memory

- Short-term memory: holds context about the agent's current situation. It is typically realized through in-context learning, which means it is short and finite because of context window constraints.

- Long-term memory: holds the agent's past behaviors and thoughts that need to be retained and recalled over an extended period. It often relies on an external vector store with fast, scalable retrieval to supply relevant information to the agent as needed.
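The two memory types can be sketched side by side. This is a toy under loud assumptions: the bag-of-words `embed` function stands in for a real embedding model, the in-memory list stands in for an external vector store, and the class names are made up.

```python
import math
from collections import Counter, deque
from typing import Deque, List, Tuple


class ShortTermMemory:
    """Keeps only the most recent messages, mimicking a finite context window."""

    def __init__(self, max_messages: int = 3) -> None:
        self.messages: Deque[str] = deque(maxlen=max_messages)

    def add(self, message: str) -> None:
        self.messages.append(message)  # the oldest message drops out when full

    def as_context(self) -> str:
        return "\n".join(self.messages)


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would call an embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[token] * b[token] for token in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class LongTermMemory:
    """Stores every record and retrieves the most similar ones on demand."""

    def __init__(self) -> None:
        self.records: List[Tuple[Counter, str]] = []

    def add(self, text: str) -> None:
        self.records.append((embed(text), text))

    def search(self, query: str, k: int = 1) -> List[str]:
        query_vec = embed(query)
        ranked = sorted(
            self.records, key=lambda rec: cosine(query_vec, rec[0]), reverse=True
        )
        return [text for _, text in ranked[:k]]


short = ShortTermMemory(max_messages=2)
for message in ["first", "second", "third"]:
    short.add(message)

long_term = LongTermMemory()
long_term.add("the user prefers metric units")
long_term.add("the user lives in paris")
long_term.add("the agent booked a flight to tokyo")
recalled = long_term.search("which city does the user live in")
print(short.as_context())
print(recalled)
```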

Tools

Tools are leveraged in different ways by LLMs:

- MRKL is a framework that combines LLMs with expert modules that are either LLMs or symbolic (for example, a calculator or a weather API).

- Toolformer fine-tunes LLMs to use external tool APIs.

- Function Calling augments LLMs with tool-use capability: a set of tool APIs is defined and provided to the model as part of a request.

- HuggingGPT is an LLM-powered agent that uses an LLM as a task planner to connect various existing AI models (based on their descriptions) to solve AI tasks.
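As a rough illustration of the MRKL idea of routing between a symbolic module and an LLM, the sketch below sends simple arithmetic to a calculator and everything else to a language model. It is not the MRKL implementation: a fixed regex stands in for the router (which in a real system would typically be a model itself), and `fallback_llm` is a hypothetical stub.

```python
import operator
import re
from typing import Tuple

OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}

# Recognizes queries of the form "<number> <operator> <number>".
ARITHMETIC = re.compile(
    r"^\s*(-?\d+(?:\.\d+)?)\s*([+\-*/])\s*(-?\d+(?:\.\d+)?)\s*$"
)


def calculator(query: str) -> str:
    """Symbolic expert module: exact arithmetic instead of model guesswork."""
    match = ARITHMETIC.match(query)
    if match is None:
        raise ValueError(f"Not an arithmetic query: {query!r}")
    left, op, right = match.groups()
    return str(OPS[op](float(left), float(right)))


def fallback_llm(query: str) -> str:
    """Hypothetical stand-in for a call to a language model."""
    return f"(LLM answer to: {query})"


def route(query: str) -> Tuple[str, str]:
    """Pick the module that should handle the query and return its answer."""
    if ARITHMETIC.match(query):
        return "calculator", calculator(query)
    return "llm", fallback_llm(query)


print(route("12 * 3.5"))
print(route("Who wrote Hamlet?"))
```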

Example

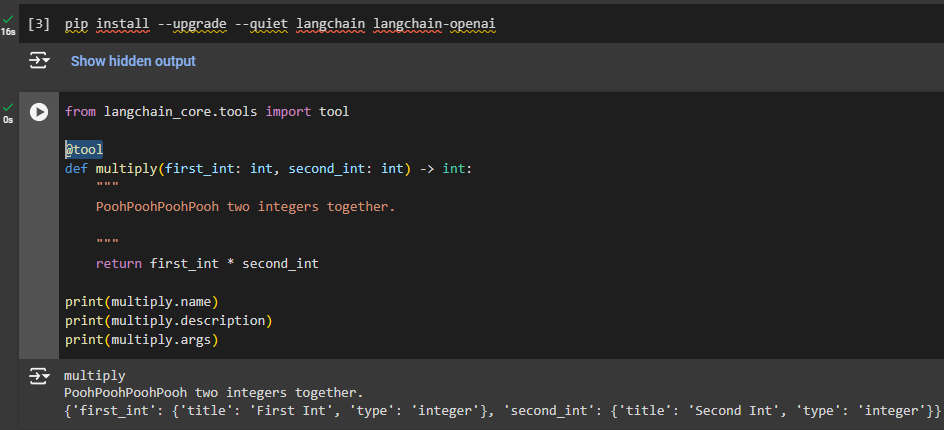

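from langchain.tools.render import render_text_description

from langchain_core.prompts import ChatPromptTemplate

from langchain_core.tools import tool

# Assumed definition of the multiply tool (it is not shown in the text above);
# the signature and docstring are inferred from the rendered description below.

@tool
def multiply(first_int: int, second_int: int) -> int:
    """Multiply two integers together."""
    return first_int * second_int

# Render each tool's name, signature, and description as plain text.

rendered_tools = render_text_description([multiply])

# rendered_tools: 'multiply: multiply(first_int: int, second_int: int) -> int - Multiply two integers together.'

# The system prompt lists the tools and asks for a JSON blob naming the tool to call.

system_prompt = f"""You are an assistant that has access to the following set of tools. Here are the names and descriptions for each tool:

{rendered_tools}

Given the user input, return the name and input of the tool to use. Return your response as a JSON blob with 'name' and 'arguments' keys."""

prompt = ChatPromptTemplate.from_messages(

    [("system", system_prompt), ("user", "{input}")]

)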
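The prompt above asks the model for a JSON blob with 'name' and 'arguments' keys. A minimal sketch of the step that follows, written in plain Python without LangChain: parse the blob and invoke the named tool. The `model_output` string is a made-up reply, and `call_tool_from_blob` is a hypothetical helper.

```python
import json
from typing import Any, Callable, Dict


def multiply(first_int: int, second_int: int) -> int:
    """Multiply two integers together."""
    return first_int * second_int


# Registry mapping tool names to plain Python callables.
TOOLS: Dict[str, Callable[..., Any]] = {"multiply": multiply}


def call_tool_from_blob(model_output: str) -> Any:
    """Parse the model's JSON reply and call the tool it names."""
    blob = json.loads(model_output)
    name = blob["name"]
    if name not in TOOLS:
        raise KeyError(f"Model asked for an unknown tool: {name!r}")
    return TOOLS[name](**blob["arguments"])


# Hypothetical model reply to "what is thirteen times 4".
model_output = '{"name": "multiply", "arguments": {"first_int": 13, "second_int": 4}}'
result = call_tool_from_blob(model_output)
print(result)
```

Looking the tool up in an explicit registry, rather than evaluating the name, keeps the model from calling anything that was not deliberately exposed.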